Navigating the Moral Maze of Automated Decisions

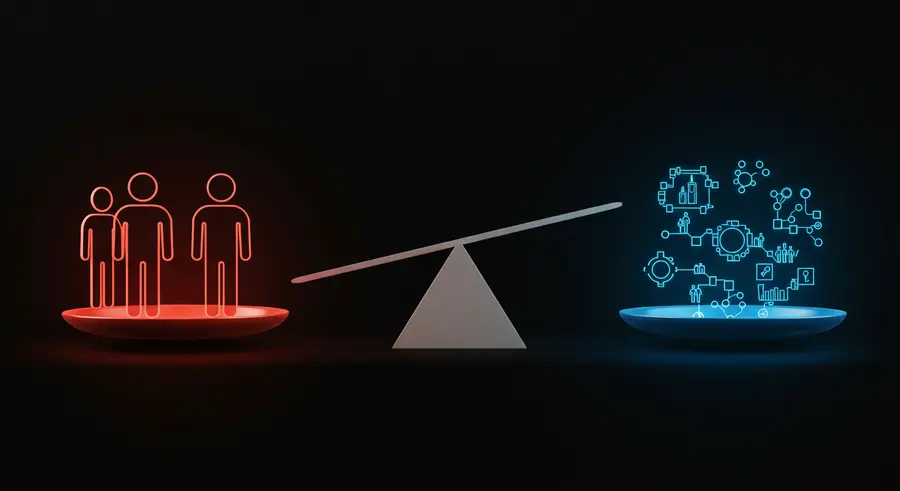

Algorithmic decision-making systems are increasingly woven into daily life. They determine loan eligibility, suggest news articles, influence hiring, and even inform judicial sentencing. While these systems offer efficiency and the potential for objectivity, they also carry risks, chiefly the perpetuation and amplification of societal biases. Ensuring that they operate ethically is one of the most pressing challenges of our time.

What is Algorithmic Bias? Unpacking the Sources

Algorithmic bias refers to systematic and repeatable errors in a computer system that create unfair outcomes, such as privileging one arbitrary group of users over others. Bias can creep into algorithms in several ways:

- Data Bias: If the data used to train an algorithm reflects existing societal biases (e.g., historical underrepresentation of women in STEM fields), the algorithm will likely learn and perpetuate these biases. This is often the most significant source.

- Algorithmic Design Bias: Choices made during the algorithm's design, such as the features selected or the model architecture, can inadvertently introduce bias.

- Human Interaction Bias: How users interact with algorithmic outputs can create feedback loops that reinforce biases. For example, if users predominantly click on certain types of algorithmically recommended content, the system may over-represent that content in the future.

- Evaluation Bias: The metrics used to evaluate an algorithm's performance might not capture its fairness across different demographic groups. A high overall accuracy, for example, can mask much poorer performance on a smaller group.
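
Evaluation bias is easiest to see with numbers. The following Python sketch uses made-up labels and hypothetical group names `A` and `B` to show how an aggregate accuracy figure can hide a complete failure on a smaller group. The function name `accuracy_by_group` is illustrative and not taken from any library.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return overall accuracy and a dict of accuracy per group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group


# Hypothetical toy data: 8 records from group "A", 2 from group "B".
y_true = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 0, 0]
groups = ["A"] * 8 + ["B"] * 2

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall)    # 0.8
print(per_group)  # {'A': 1.0, 'B': 0.0}
```

An overall accuracy of 80% looks acceptable here, yet every prediction for group `B` is wrong. Reporting metrics per group alongside the aggregate is one simple way to surface this kind of gap.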

For deeper insight into bias in AI, see the research published by organizations such as the AI Now Institute.

The Societal Impact: When Algorithms Discriminate

The consequences of biased algorithms can be far-reaching and detrimental:

- Employment: Biased hiring algorithms can systematically filter out qualified candidates from underrepresented groups, limiting diversity and equal opportunity.

- Finance: Algorithmic credit scoring systems might unfairly deny loans or offer worse terms to individuals based on proxies for race or socioeconomic status.

- Criminal Justice: Predictive policing tools and the risk assessment algorithms used in sentencing have been shown to disproportionately affect minority communities and perpetuate cycles of incarceration.

- Healthcare: Algorithms used for diagnosis or treatment recommendations could perform less accurately for certain demographic groups if trained on unrepresentative data.

- Information Access: Biased content recommendation algorithms can create filter bubbles and echo chambers, limiting exposure to diverse perspectives and potentially amplifying misinformation.
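
One rough way to check whether a feature acts as a proxy, as in the finance item above, is to ask how much better a protected attribute can be guessed once the feature is known. The sketch below is a minimal Python illustration under that assumption. The `postcode` and `group` values are invented, and `proxy_strength` is a hypothetical helper rather than an established statistical test.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, protected_values):
    """Compare guessing the protected attribute with and without a feature.

    Returns (baseline, with_feature):
      baseline     - accuracy of always guessing the most common protected value
      with_feature - accuracy of guessing the most common protected value
                     within each distinct feature value
    """
    n = len(protected_values)
    baseline = Counter(protected_values).most_common(1)[0][1] / n

    # Count protected values separately for each feature value.
    by_feature = defaultdict(Counter)
    for feat, prot in zip(feature_values, protected_values):
        by_feature[feat][prot] += 1

    hits = sum(counts.most_common(1)[0][1] for counts in by_feature.values())
    return baseline, hits / n


# Hypothetical toy data: the protected attribute is never given to the model,
# but postcode is.
postcode = ["N1", "N1", "N1", "N1", "S2", "S2", "S2", "S2"]
group = ["x", "x", "x", "y", "y", "y", "y", "x"]

baseline, with_feature = proxy_strength(postcode, group)
print(baseline, with_feature)  # 0.5 0.75
```

In this toy data, knowing the postcode raises the chance of guessing the group from 50% to 75%, so a model that uses postcode can partly reconstruct the attribute it was never shown. A large gap between the two numbers is a prompt for closer review, not proof of discrimination.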

Strategies for Mitigation: Towards Fairer AI

Addressing algorithmic bias requires a multi-faceted approach:

- Diverse and Representative Data: Actively collecting and curating diverse datasets that accurately represent the populations an algorithm will affect is crucial. This includes efforts to identify and correct historical biases within the data.

- Fairness Metrics and Audits: Robust fairness metrics are needed to assess algorithmic performance across different subgroups. Regular audits by independent bodies can help identify and rectify biases. Organizations like the Partnership on AI work on establishing best practices.

- Transparency and Explainability (XAI): Making algorithms more transparent and their decisions explainable is key. If we understand *how* an algorithm arrives at a decision, it's easier to identify and correct biases.

- Human Oversight and Intervention: Maintaining human oversight in critical decision-making processes where algorithms are used can provide a crucial check against automated bias. Humans should have the ability to override or question algorithmic outputs.

- Ethical Frameworks and Regulation: Clear ethical guidelines and regulatory frameworks are needed for the development and deployment of AI systems. This includes defining who is accountable for algorithmic harm.

- Diverse Development Teams: Ensuring that the teams building AI systems are diverse in terms of gender, ethnicity, background, and expertise can help identify potential biases early in the design process.
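
As a minimal sketch of what a fairness audit might compute, the Python below derives three commonly used group metrics from a model's binary predictions: demographic parity difference, disparate impact ratio, and equal opportunity difference. The data, group labels, and function names are hypothetical. A real audit would typically use far larger samples and weigh several metrics together.

```python
def selection_rate(y_pred, groups, group):
    """Share of records in `group` that received a positive prediction."""
    picks = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(picks) / len(picks)


def true_positive_rate(y_true, y_pred, groups, group):
    """Share of truly positive records in `group` predicted positive."""
    hits = [p for t, p, g in zip(y_true, y_pred, groups)
            if g == group and t == 1]
    return sum(hits) / len(hits)


def fairness_report(y_true, y_pred, groups, group_a, group_b):
    """Compare two groups.

    Assumes both groups are non-empty, both contain true positives,
    and group_a has a non-zero selection rate.
    """
    rate_a = selection_rate(y_pred, groups, group_a)
    rate_b = selection_rate(y_pred, groups, group_b)
    tpr_a = true_positive_rate(y_true, y_pred, groups, group_a)
    tpr_b = true_positive_rate(y_true, y_pred, groups, group_b)
    return {
        # Gap in how often each group receives the positive outcome.
        "demographic_parity_difference": rate_a - rate_b,
        # Ratio of group_b's selection rate to group_a's.
        "disparate_impact_ratio": rate_b / rate_a,
        # Gap in how often qualified members of each group are selected.
        "equal_opportunity_difference": tpr_a - tpr_b,
    }


# Hypothetical toy data: 1 = approved (y_pred) or actually qualified (y_true).
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4

report = fairness_report(y_true, y_pred, groups, "A", "B")
for name, value in report.items():
    print(f"{name}: {value:.2f}")
# demographic_parity_difference: 0.50
# disparate_impact_ratio: 0.33
# equal_opportunity_difference: 0.50
```

These metrics capture different notions of fairness and can disagree with one another, so the choice of which to prioritize is itself a design decision that benefits from the human oversight and diverse perspectives listed above.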

The Role of Regulation and Ethical Governance

Governments and international bodies are beginning to grapple with the need for AI regulation. Initiatives like the EU AI Act aim to create a legal framework for trustworthy AI, categorizing AI systems by risk and imposing stricter requirements on high-risk applications. Effective data governance within organizations is also paramount, ensuring that ethical considerations are embedded throughout the AI lifecycle.

Future Directions: A Continuous Pursuit of Fairness

The field of algorithmic fairness is rapidly evolving. Researchers are exploring new techniques for bias detection and mitigation, developing more nuanced fairness definitions, and considering the long-term societal effects of AI. The journey towards truly ethical and unbiased algorithmic decision-making is ongoing and requires continuous vigilance, interdisciplinary collaboration, and a commitment to upholding human values.

By consciously addressing the ethical dimensions of algorithmic systems, we can harness their power for good while minimizing the risks of perpetuating inequality and discrimination.